They shared that the error logs showed they had exceeded the default Max Connections.

We both made the mistake of assuming this was just business growth (more sites, sites are doing better, etc.) and just increased the settings to where the client and their customers were happy.

Close to 24 hours pass, and the client puts in another trouble ticket stating the sites are all down again.

This doesn’t make sense. So let’s dig deeper.

Tip #1 – Let’s find out what IP addresses are connecting to non-SSL sites (port 80) by running the following command on the server (root privileges required):

netstat -ntu | grep ":80" | awk '{print $5}' | cut -d: -f1 | sort | uniq -c | sort -n | more

In the client’s case the list was very long.
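One caveat with the one-liner above: grep ":80" also matches ports such as 8080, as well as remote ports that merely begin with 80, and cutting on the first colon truncates IPv6 addresses. Here is a minimal sketch of a stricter variant, assuming the usual netstat column layout (field 4 is the local address, field 5 is the remote address). The function names are made up for illustration.

```shell
# Sketch: list the remote peer of every connection whose LOCAL port is exactly 80.
# Reads "netstat -ntu" style lines on stdin: field 4 = local, field 5 = remote.
peers_on_port80() {
    awk '$4 ~ /:80$/ {
        peer = $5
        sub(/:[0-9]+$/, "", peer)   # strip only the trailing :port
        print peer
    }'
}

# Per-IP connection counts, busiest last (same ordering as the one-liner).
count_port80_peers() {
    peers_on_port80 | sort | uniq -c | sort -n
}
```

Usage would be along the lines of: netstat -ntu | count_port80_peers | more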

Tip #2 – Let’s find out how many unique IP addresses are on this list (please note that because time passes between commands, the results will not match exactly; numbers will be approximate) by running the following command on the server:

netstat -ntu | grep ":80" | awk '{print $5}' | cut -d: -f1 | sort -u | wc -l

In the case of this particular client, the number was close to 3,000.

Given those two pieces of information, and knowing that most of our other clients (including some that host a very large number of sites per server) might see a high of around 350 unique IP addresses compared to nearly 3,000 here, something is amiss.
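If you want to turn that comparison into something you can run on a schedule, here is a rough sketch. It assumes you feed it one IP address per line on stdin. The function name and the default baseline of 350 (borrowed from the figure above) are illustrative only; pick a baseline that matches what is normal for your own servers.

```shell
# Sketch: compare the number of unique client IPs against a baseline.
# Reads one IP per line on stdin; $1 is the baseline (assumed default: 350).
check_unique_peers() {
    baseline=${1:-350}
    # Count distinct non-empty lines; prints 0 for empty input.
    count=$(sort -u | awk 'NF { n++ } END { print n + 0 }')
    if [ "$count" -gt "$baseline" ]; then
        echo "WARN: $count unique IPs (baseline $baseline)"
    else
        echo "OK: $count unique IPs (baseline $baseline)"
    fi
}
```

For example: netstat -ntu | grep ":80" | awk '{print $5}' | cut -d: -f1 | check_unique_peers 350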

Tip #3 – Let’s see if we can narrow down if a particular site on the server is being attacked or otherwise abused by running the following command on the server:

/usr/bin/lynx -dump -width 500 http://127.0.0.1/server-status | grep GET | grep -v unavailable | awk '{print $12}' | sort | uniq -c | sort -rn | head

NOTES:

(a) You may need to install Lynx. If you are on CentOS this can be as easy as “yum install lynx -y” and then chmod 700 /usr/bin/lynx

(b) If you are running cPanel, the syntax changes slightly to

/usr/bin/lynx -dump -width 500 http://127.0.0.1/whm-server-status | grep GET | awk '{print $12}' | sort | uniq -c | sort -rn | head

(c) If you are not getting any output, check the Apache server status, then check your Apache configuration to make sure mod_status is included. A secure method for getting status in Apache is as follows (in your httpd.conf or in a file included from your httpd.conf):

ExtendedStatus On

<Location /server-status>

SetHandler server-status

Order allow,deny

Allow from localhost

</Location>

<Location /server-info>

SetHandler server-info

Order deny,allow

Deny from all

Allow from 127.0.0.1

</Location>

(d) If all of the above is in place and you get a default page when using the lynx command, then check your local DNS resolution in /etc/resolv.conf; you should be able to dig localhost and have it return 127.0.0.1

Now, in the situation with this client the output was “974” for one domain with the next highest competing domain being at “5”. In this case, we had a hit.
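The server-status command in Tip #3 depends on the virtual host landing in field 12, and that position can shift between Apache versions and status page layouts. Here is a hedged sketch that looks for the GET token instead and takes the field just before it. It assumes the VHost column sits immediately before the request in the dump, which you should confirm against your own output. The function name is hypothetical.

```shell
# Sketch: tally in-flight GET requests per virtual host from a server-status dump.
# Assumption: the VHost column sits immediately before the request method,
# so we search for the GET token instead of hard-coding field 12.
vhost_hits() {
    awk '{
        for (i = 2; i <= NF; i++) {
            if ($i == "GET") { print $(i - 1); break }
        }
    }' | sort | uniq -c | sort -rn
}
```

Usage would look like: /usr/bin/lynx -dump -width 500 http://127.0.0.1/server-status | vhost_hits | head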

Logging into the end user customer control panel for the domain in question showed that the customer was close to 30 GB of traffic when a normal month was less than 5 GB.

Looking at the site logs showed close to 8,000 unique IP addresses; each one grabbing an image in the image directory.
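For anyone wanting to repeat that log review, the sketch below is one way to do it. It assumes the access log is in the common or combined log format, where field 1 is the client IP and field 7 is the request path; adjust the field numbers if your log format differs. Both function names are made up for illustration.

```shell
# Sketch: summarize an access log read from stdin.
# Assumes Common/Combined Log Format: field 1 = client IP, field 7 = request path.

# Most requested paths, busiest first ($1 = how many to show, default 10).
top_paths() {
    awk '{ print $7 }' | sort | uniq -c | sort -rn | head -n "${1:-10}"
}

# Number of distinct client IPs that requested the path given as $1.
unique_ips_for_path() {
    awk -v path="$1" '$7 == path { print $1 }' | sort -u | awk 'END { print NR }'
}
```

Run top_paths first to spot the resource being hammered, then pass that path to unique_ips_for_path to see how many distinct addresses are involved.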

I changed over to the image directory and confirmed the image in question was really an image; I also found a number of C SHELL hacker applications there and cleaned them up.

Perusing the site showed our client’s customer was running WordPress 3.3.1 (while 3.4 just came out this past week, 3.3.2 contained security fixes; and chances are high the customer had outdated themes and plugins as well).

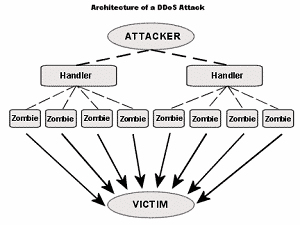

I asked our client to check if their data center had anything in place to help with a DDoS attack of approximately 8,000 IP addresses where the approximate bandwidth being utilized was 10 to 15 GB per day.

The data center came back stating it was not a DDoS attack; everything is fine.

While I realize the phrase DDoS can be overused, if close to 8,000 unique IPs attacking a single resource is not a DDoS, I’m not sure what to say.

Just as President Obama stating “The private sector is fine” while he schmoozes with the Hollywood elite doesn’t make it so, the data center stating “everything is fine” when all sites are down doesn’t change reality.

The customer was not in a position to migrate from the data center.

The domain being attacked was on a shared IP address (so null routing the IP would cause issues for all of the other customers).

Is there anything that can be done?

What I’m about to share in terms of additional tips worked for this customer because they have 16 GB of RAM on their server; and it’s a relatively new server. Use what I’m about to share with caution if your environment has a lot less RAM.

I appreciate the APF software firewall and the CSF software firewall. Each one has their pros and cons.

From having worked extensively with APF for years, I knew in advance that APF handling approximately 8,000 denies would be pushing it. So I switched the customer from APF to CSF and raised DENY_IP_LIMIT in /etc/csf/csf.conf from the default to 8000.
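Before loading a large list, it may be worth confirming what the limit is actually set to. The sketch below assumes csf.conf stores each setting on one line as NAME = "value"; check that against your own file. The function name csf_setting is hypothetical and is not part of CSF.

```shell
# Sketch: print the value of one setting from a csf.conf-style file.
# Assumption: settings are written as  NAME = "value"  one per line.
#   $1 = setting name, $2 = config file (defaults to /etc/csf/csf.conf)
csf_setting() {
    awk -v key="$1" '$1 == key && $2 == "=" {
        gsub(/"/, "", $3)   # drop the surrounding double quotes
        print $3
        exit
    }' "${2:-/etc/csf/csf.conf}"
}
```

For example, csf_setting DENY_IP_LIMIT prints the current limit so you can compare it with the number of addresses you are about to deny.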

Tip #4 – To create the list of IPs to block, I ran the following command (log file path and log file name changed to protect client and their customer privacy):

cat /full-path-to-site-access-log | awk '{print $1}' | sort -u | awk '{print "csf -d " $1 " DDoS attacking IP against [customer domain name]"}' > /root/csf.list

Then I ran

sh /root/csf.list

followed by restarting Apache.

A few minutes later, the close to 8,000 IP addresses were now blocked and the entries properly showed up in /etc/csf/csf.deny

NOTES:

(a) The actual syntax you would use for tip #4 may vary based on how the log file is formatted. In this particular case the first entry in the access log was the IP address of the attacker.

(b) Whether it is from an article from Dynamic Net, Inc. or from other sources, you should always work through an understanding of the command rather than blindly copying and pasting.
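One thing to keep in mind with Tip #4 is that it denies every address in the log, including legitimate visitors and possibly your own. Here is a more conservative sketch, assuming a common or combined log format (field 1 is the IP, field 7 is the request path). It keeps only addresses that requested one specific path, skips anything that does not look like an IPv4 address, and lets you exclude one address you never want blocked. The function name and its arguments are illustrative.

```shell
# Sketch: build "csf -d" commands only for IPs that requested one abused path.
# Reads a Common/Combined Log Format access log on stdin.
#   $1 = abused request path
#   $2 = comment text to store with each deny
#   $3 = one IP that must never be blocked (optional)
build_deny_commands() {
    awk -v path="$1" -v keep="$3" '
        $7 == path && $1 != keep && $1 ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ {
            print $1
        }' | sort -u | awk -v note="$2" '{ print "csf -d " $1 " " note }'
}
```

You would redirect the access log into the function, write the output to a file such as /root/csf.list, read it over, and only then run it with sh.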

The result, at least for now, is that our customer has a server able to meet the needs of the customers that were not under the DDoS attack.

Since this entire article is oriented towards geeks, here are the numbers: the RAM utilization for having that many IP addresses in a software firewall did skyrocket.

The server normally uses 3 GB to slightly under 4 GB of RAM.

CSF, when loaded with the close to 8,000 IPs, ended up with close to 17,000 rules (each deny created two rules).

This caused the RAM utilization to cap at the 16 GB and just slightly (less than a few MB) eat into swap space.

Server load several hours after having CSF block the known IPs stood under 1.00.
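If you want to check the rule count on your own server, here is a small sketch. It assumes you pipe in the output of iptables -S, where each rule is printed as a line beginning with -A. The function name is made up.

```shell
# Sketch: count appended rules in "iptables -S" output read from stdin.
# Lines starting with -A are rules; -P (policy) and -N (new chain) lines are skipped.
count_iptables_rules() {
    awk '/^-A / { n++ } END { print n + 0 }'
}
```

As root: iptables -S | count_iptables_rules, and pair it with free -m to watch memory and swap while the list loads.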

Lessons learned include the following:

- If Apache MaxClients is normally 150 or under, and you need to set it to 1024 to have everything appear at peace, there may be an iceberg (i.e. DoS or DDoS).

- If your server has the RAM and CPU power, you could operate 16,000+ iptables rules.

OTHER:

There are data centers that specialize in anti-DDoS as well as vendors who sell anti-DDoS appliances. By no means am I sharing the various steps in the article as a replacement to either.

However, if you are a small business hosting provider that needs to make every dollar count, and you get hit with the unexpected, sometimes a DIY approach (granted, in this case they hired us to handle all of this for them) works well enough to get you through.

My hope is that if you have gotten this far in the article, you are going to test out the various tips to see what the output might be on your server, dig deeper, and maybe put them aside for a day you hope doesn’t happen… but when it does… you now have some, hopefully handy, tools to make your life somewhat easier during that stressful time.

Contact us if you are interested in learning more about our managed services.

Do you have some tips to share about useful Linux commands to track down a potential DDoS or how to handle one in a pinch? Please comment.